The purpose of Lab 4 is to enhance one's skills in:

1) Image delineation methods (subsetting with and without a shapefile).

2) Spatial enhancement of an image (pan-sharpen).

3) Radiometric enhancement (haze reduction).

4) Linking to and using Google Earth as a source of ancillary information.

5) Introductory image-resampling methods (Nearest Neighbor and Bilinear Interpolation).

Part 1. Image Delineation Methods:

Subsetting without a shapefile: This portion of the lab will use the

Erdas Imagine 2013 Inquire Box to subset an area of interest (AOI).

Step 1: Open the image you wish to subset in the Erdas viewer. In this case it is eau_claire_2011.img.

Step 2: Click

on the Raster tab in the Erdas

viewer to activate the raster tools.

Step 3:

Right-click on the image and select Inquire

Box.

Step 4: The Inquire Box should appear on the

screen. Use the mouse to adjust the parameters of the box to the appropriate

AOI. In this case the box is covering the areas encompassing Eau Claire and

Chippewa Falls, WI.

Step 5:

Click Apply in the Inquire Box dialogue.

Step 6: Click

Subset & Chip from the Raster Tools in the Erdas screen

followed by Create Subset Image from

the dropdown menu. This creates a Subset

dialogue box with the input file’s name already entered.

Step 7: a) Click

on the folder icon below the output file section of the dialogue box.

b) Now, select the appropriate

location for the output image to appear.

c) Once this is done, choose a

name for the output file. In this case the file’s

name is eau_claire_2011sb_ib.img.

d) Click on From Inquire Box. This should synchronize the ULX, LRX, ULY, and

LRY coordinates between the Subset and Inquire Box dialogues.

e) Click OK in the Subset dialogue

box and leave all other parameters at their default values. Doing this will create

the desired subset.

f) Click Dismiss and then close the Process

List view.

Step 8:

Clear the original image from the Erdas viewer and bring in the subset one that

was just created, shown in figure 1.
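The Inquire Box subset above can also be pictured as a simple array slice. The following is a minimal sketch in Python with NumPy, not the Erdas implementation: it assumes a north-up image with square cells, and the function name, raster values, and coordinates are hypothetical. It converts the ULX, ULY, LRX, and LRY map coordinates into row and column offsets and cuts out the rectangle.

```python
import numpy as np

def subset_by_box(image, origin_x, origin_y, cell_size, ulx, uly, lrx, lry):
    """Return the part of `image` that falls inside a map-coordinate box.

    image     : 2-D array (rows, cols), north-up
    origin_x/y: map coordinates of the upper-left corner of the image
    cell_size : pixel size in map units (square cells assumed)
    """
    # Map x grows to the right (columns); map y grows upward while rows grow downward.
    col_start = int(np.floor((ulx - origin_x) / cell_size))
    col_stop = int(np.ceil((lrx - origin_x) / cell_size))
    row_start = int(np.floor((origin_y - uly) / cell_size))
    row_stop = int(np.ceil((origin_y - lry) / cell_size))
    # Clip to the image so a box that overhangs the edge still works.
    rows, cols = image.shape
    row_start, row_stop = max(row_start, 0), min(row_stop, rows)
    col_start, col_stop = max(col_start, 0), min(col_stop, cols)
    return image[row_start:row_stop, col_start:col_stop]

# Hypothetical 6 x 8 raster with 30 m cells; upper-left corner at (0, 180).
image = np.arange(48).reshape(6, 8)
subset = subset_by_box(image, 0.0, 180.0, 30.0,
                       ulx=60.0, uly=150.0, lrx=180.0, lry=60.0)
print(subset.shape)
```

Because the result is a plain slice, the subset can only ever be a rectangle, which is the limitation of the Inquire Box method.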

Figure 1

shows the original image (eau_claire_2011.img) with the Inquire Box over the

desired AOI. The extent of this image is directly set when the user expands or

contracts the inquire box in the Erdas viewer over an area of the larger,

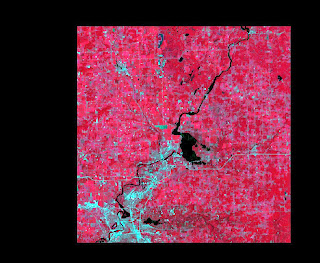

original image. In contrast, figure 2 shows the image that was created using

the parameters established by the Inquire Box (eau_claire_2011sb_ib.img).

Figure 1.

Figure 2.

Figure 2 shows the image of the AOI that was created in Erdas 2013 using the Inquire Box subset method (eau_claire_2011sb_ib.img). This image corresponds to the Inquire Box in the upper right-hand corner of figure 1.

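Subsetting with a shapefile: This portion of the lab will use a counties shapefile to define the AOI.

Step 1: Open the file eau_claire_2011.img in the Erdas 2013 viewer.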

Step 2: Bring a counties shapefile into the viewer so that it overlays the first image. In this case the file is ec_cpw_cts.shp.

Step 3: Hold

down the Shift key on the keyboard

and select the two counties; this should change them from purple to yellow.

Step 4:

Click Home at the top of the Erdas

tools menu, then select Paste From

Selected Object from the menu (the clipboard to the right of the Paste key). This will create a series

of dotted lines around the counties that were selected and indicate the

boundaries of the new AOI.

Step 5:

Click File, Save as [AOI Layer]. Navigate

to the appropriate folder and name the AOI. In this case the name is ec_cpw_cts.aoi.

Step 6: Click

on Raster then Subset & Chip.

Step 7: a) Click on the folder icon beside the output section of the Subset dialogue box and go to the folder where the output image is to be saved. In this case the output file is ec_cpw_2011sb_ai.img.

b) Click on the AOI button. A new dialogue box (Choose AOI) will open.

c) In the Choose AOI dialogue box choose AOI

File as the AOI source. Navigate to the

AOI file. In this case it is ec_cpw_cts.aoi.

d) Leave all default parameters as

they are and click OK.
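Subsetting with an AOI differs from the Inquire Box in that pixels are kept or discarded according to a polygon outline rather than a rectangle. The sketch below illustrates the idea in Python with NumPy, using a ray-casting point-in-polygon test on pixel centers. It is an illustration only: the triangle stands in for a county boundary, vertices are given in pixel coordinates, and nothing here is taken from how Erdas reads .shp or .aoi files.

```python
import numpy as np

def polygon_mask(shape, polygon):
    """Boolean mask of pixels whose centers fall inside `polygon`.

    shape   : (rows, cols) of the image
    polygon : list of (col, row) vertices in pixel coordinates
    """
    rows, cols = shape
    yy, xx = np.mgrid[0:rows, 0:cols]
    px, py = xx + 0.5, yy + 0.5          # pixel centers
    inside = np.zeros(shape, dtype=bool)
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if y1 == y2:
            continue                      # a horizontal edge never crosses the ray
        # Does a horizontal ray from the pixel center cross this edge?
        crosses = (y1 > py) != (y2 > py)
        x_at_py = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        # An odd number of crossings to the right means the point is inside.
        inside ^= crosses & (px < x_at_py)
    return inside

# Hypothetical 5 x 5 image and a triangular AOI.
image = np.arange(25).reshape(5, 5) + 1
mask = polygon_mask(image.shape, [(0, 0), (4.5, 0), (0, 4.5)])
clipped = np.where(mask, image, 0)       # pixels outside the AOI become 0
print(clipped)
```

Pixels outside the outline are set to 0 here, so the output keeps a rectangular array while only the AOI carries data.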

Figure 3 shows the AOI that was created using the procedures above. In this case the AOI covers Eau Claire and Chippewa Counties (Wisconsin), and its extent was defined by the county shapefile. Inquire Box AOIs, like the one in figure 2, are not very useful in remote sensing as they are limited in shape to squares and rectangles.

Figure 3.

Figure 3 was created from the same image found in figure 1. However, county shapefiles were applied to distinguish the AOI.

Part 2: Image Fusion: This section will merge a multispectral image with coarse spatial resolution (30 m) with a panchromatic image with finer resolution (15 m). This technique is known as pan-sharpening.

Step 1:

Bring the coarse-resolution image into the Erdas Viewer; in this case the

reflective image: ec_cpw_2000.img.

Step 2: Open

a second viewer in Erdas and bring in the panchromatic image with finer

resolution (ec_cpw_2000pan.img).

Step 3:

Click on the Raster menu.

Step 4:

Click on Pan Sharpen in the raster

tool menu and then Resolution Merge

from the

resulting drop-menu.

Step 5: A Resolution Merge dialogue box should

now open.

a) Make sure the ec_cpw_2000pan.img is in the High

Resolution Input File and

ec_cpw_2000.img is in the Multispectral

Input File.

b) Click on the folder icon beside the Output File section. Go to the

appropriate folder and name the new file ec_cpw_200ps.img.

c) Back in the Resolution Merge dialogue box, click Multiplicative under Method

and Nearest Neighbor under Resampling Techniques.

d) Leave the rest of the parameters at their defaults and click OK.

e) Once the resolution merge model has finished running, click Dismiss.
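The Multiplicative and Nearest Neighbor choices in Step 5 can be sketched in a few lines of Python with NumPy. This is a rough illustration under stated assumptions, not the Erdas model: it assumes the multiplicative method is a per-pixel product of each multispectral band with the panchromatic band, that the two images are already co-registered, and that the resolution ratio is a whole number. The function name and the tiny arrays are hypothetical.

```python
import numpy as np

def pan_sharpen_multiplicative(ms, pan):
    """Merge a coarse multispectral stack with a finer panchromatic band.

    ms  : array of shape (bands, rows, cols)
    pan : array of shape (rows * k, cols * k) for a whole-number factor k
    """
    factor = pan.shape[0] // ms.shape[1]
    # Nearest neighbor upsampling: repeat every coarse pixel `factor` times
    # along both image axes so it lines up with the pan grid.
    ms_up = np.repeat(np.repeat(ms, factor, axis=1), factor, axis=2)
    # Assumed multiplicative merge: band value times pan value, in float
    # so the product does not overflow the original integer type.
    return ms_up.astype(np.float64) * pan.astype(np.float64)

# Hypothetical one-band 2 x 2 image (30 m) and a 4 x 4 pan image (15 m).
ms = np.array([[[1, 2], [3, 4]]])
pan = np.full((4, 4), 2)
sharp = pan_sharpen_multiplicative(ms, pan)
print(sharp.shape)
```

The output has the pan image's grid, so each band holds four times as many pixels as the 30 m input.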

Figure 4 shows the original images before

they underwent resolution merge. These two images, with the multispectral one

on the left and the panchromatic on the right, were used to create a

pan-sharpened image, illustrated in figure 5. Pan-sharpening enables the

merging of a multispectral image with coarse spatial resolution to a

panchromatic one with finer resolution.

Figure 4 shows the multispectral image, ec_cpw_2000.img (left), and the panchromatic image, ec_cpw_2000pan.img (right) prior to being merged in the Erdas 2013 viewer.

Figure 5.

Figure 5 shows the pan-sharpened

image created in the Erdas 2013 viewer following the steps outlined in section

2 of this lab.

Q1. Outline the difference you observed between your reflective image

and your pan-sharpened one.

The

pan-sharpened (PS) image of the EC/CF area has much better spatial resolution

than the original multi-spectral (MS) image (15 meters and 30 meters, respectively).

Because of this, one is able to zoom in much closer in the PS image before

blurring occurs as opposed to the MS image. Also, the increased spatial

resolution in the PS image makes its colors more vivid than in the MS image.

This allows for easier identification of farm fields as they appear to contrast

with one another more in the PS image compared to the MS image.

Also, exploring the metadata of the original files and that of the PS file shows that the PS file is much larger than the originals. For instance, the file size of the PS image is 674.94 MB compared to 42.59 MB for the multispectral image and 27.47 MB for the original panchromatic image.
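As a quick check on these file sizes, the short Python calculation below compares the growth expected from pixel count alone with the growth actually reported. Only the cell sizes and file sizes come from this lab; the variable names are for illustration.

```python
ms_cell, ps_cell = 30.0, 15.0                 # cell sizes in meters
pixel_factor = (ms_cell / ps_cell) ** 2       # growth in pixels per band

ms_size_mb, ps_size_mb = 42.59, 674.94        # file sizes reported above
size_ratio = ps_size_mb / ms_size_mb          # observed growth in file size

print(pixel_factor, round(size_ratio, 2))
```

Halving the cell size accounts for a fourfold increase in pixels, which is well short of the observed ratio. The remainder is not explained by the metadata reviewed here; a difference in how the output pixel values are stored is one possibility, but that is an assumption.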

Part 3: Image Enhancement: The Erdas

Haze Reduction tool will be used in this section to improve the spectral and

radiometric quality of the given image. In this case, the image is eau_claire_2007.img which is

illustrated in figure 6 (left) alongside the image that was produced after the

haze reduction tool was applied (ec_2007_haze_r.img;

right).

Step 1: Bring the image to which the haze reduction tool is to be applied into the Erdas 2013 viewer. In this case the image is eau_claire_2007.img.

Step 2: Go

to the Erdas Raster tools and select Radiometric

and click on Haze Reduction in

the resulting drop-menu.

Step 3: A haze

reduction dialogue box should now open. The file currently open in the Erdas

viewer should appear as the input file.

a) Go to the folder icon beside the

output file section and navigate to the desired output location. Name the new

image ec_2007_haze_r.img.

b) Accept all default values in the haze

reduction dialogue box and click OK

to run the model.

c) When the model is done running, click

Dismiss and close the window.

Step 4: Open a new viewer in Erdas and compare the original image and the one that underwent the haze reduction processing.

Q2. Outline the differences you observed between the original image and the haze reduction one.

The first

noticeable difference between the two images is that the one on which the

haze-reduction tool was used (HR) is much clearer than the original. Figure 6

shows both the haze reduced image and the original on the right and left,

respectively. Comparing the metadata of the two images, one can see that pixels that registered as 0 in the original were uniformly raised to 69 in the HR version. However, pixels that had assigned values in the original had brightness values subtracted from them in the corresponding rows/columns of the HR version.

Also, comparing the statistics of the HR version to the original shows, respectively: mean = 66.304 vs. 47.0; standard deviation = 6.776 vs. 32.522; median = 67 vs. 62; and mode = 69 vs. 0.
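For reference, the four statistics compared above can be computed for any band as in the following Python sketch with NumPy. The tiny array is made up for illustration and is not taken from either image, and Erdas may compute the standard deviation slightly differently (for example, as a sample rather than a population statistic).

```python
import numpy as np

def band_stats(band):
    """Mean, standard deviation, median, and mode of an integer image band."""
    values = band.ravel()
    counts = np.bincount(values)          # works for non-negative integers
    return {
        "mean": float(values.mean()),
        "std": float(values.std()),       # population standard deviation
        "median": float(np.median(values)),
        "mode": int(counts.argmax()),     # most frequent brightness value
    }

# Hypothetical 8-bit band in which background pixels are stored as 0.
band = np.array([[0, 0, 62], [62, 70, 0]], dtype=np.uint8)
stats = band_stats(band)
print(stats)
```

A mode of 0, as in the original image, typically means that zero-valued background pixels outnumber any single brightness value inside the scene.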

The Erdas 2013 haze reduction tool was applied to eau_claire_2007.img (left). The radiometrically enhanced image, ec_2007_haze_r.img, is shown on the right side of the figure in the Erdas 2013 Viewer.

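Part 4: Linking to Google Earth: This section links the image in the Erdas viewer to Google Earth as a source of ancillary information.

Step 1: Bring the image that is to be linked with Google Earth into the Erdas 2013 viewer. In this case it is eau_claire_2011.img.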

Step 2: Fit

the image to frame and click on Google

Earth on the top of the Erdas Viewer tool menu.

Step 3:

Click on the Connect to Google Earth tool.

Once the Google Earth window opens, move it to another monitor.

Step 4:

Click on Match GE to View in the tool menu. This will link the original image

to Google Earth.

Step 5: Synchronize Google Earth with the original view in the Erdas interface. To do this, click on Synch GE to View in the tool bar.

Now, when one pans or zooms in or out of eau_claire_2011.img, the movement should be duplicated in the Google Earth window on the second monitor.

Q3. Can the Google Earth view serve as an image interpretation key? Why

or why not?

It would probably be appropriate to use Google Earth (GE) as an interpretation key for certain nominal information like place-names (if none are given on the image being interpreted). The only issue with using GE as an interpretation key beyond place-names has to do with the accuracy of the information. Unless there are explicit sources for the creation and interpretation of the data presented in the GE viewer, I would not expect the use of such information to be appropriate.

Q4. If so, what type of interpretation key is it?

If used to identify place-names, GE would be classified as a selective key. This is because the image interpreter would select the features found on GE that best match those found in their original image.

Part 5: Resampling: Resampling is an image

interpretation technique which applies an algorithm to create a new image with

different pixel sizes. However, the changing of pixel sizes does not change the

spatial resolution of the image. The purpose of resampling is to correct the images

geometrically and to synchronize two images from different sensors that have

different pixel sizes.

Two methods of resampling will be used in this section: nearest neighbor (NN) and bilinear interpolation (BLI). NN assigns each pixel in the output image the brightness value of the closest pixel in the input image. In contrast, BLI uses the brightness values of the four closest pixels in the input image to calculate each output pixel's value (Wilson, 2012).
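The two methods can be compared side by side in a short Python sketch with NumPy. This is a simplified illustration of the general techniques, not the Erdas algorithm: it assumes square cells, aligns the grids at the upper-left corner, and clamps output pixel centers to the edge of the input. The function name and the 2 x 2 test image are hypothetical.

```python
import numpy as np

def resample(image, in_cell, out_cell, method="nearest"):
    """Resample a 2-D image from `in_cell` to `out_cell` pixel size."""
    rows, cols = image.shape
    out_rows = int(round(rows * in_cell / out_cell))
    out_cols = int(round(cols * in_cell / out_cell))
    # Centers of the output pixels, expressed in input pixel-index units.
    r = (np.arange(out_rows) + 0.5) * out_cell / in_cell - 0.5
    c = (np.arange(out_cols) + 0.5) * out_cell / in_cell - 0.5
    r = np.clip(r, 0, rows - 1)
    c = np.clip(c, 0, cols - 1)
    if method == "nearest":
        # Copy the value of the single closest input pixel.
        ri = np.floor(r + 0.5).astype(int)
        ci = np.floor(c + 0.5).astype(int)
        return image[np.ix_(ri, ci)]
    # Bilinear: distance-weighted average of the four surrounding pixels.
    r0 = np.floor(r).astype(int)
    c0 = np.floor(c).astype(int)
    r1 = np.minimum(r0 + 1, rows - 1)
    c1 = np.minimum(c0 + 1, cols - 1)
    wr = (r - r0)[:, None]
    wc = (c - c0)[None, :]
    img = image.astype(np.float64)
    top = img[np.ix_(r0, c0)] * (1 - wc) + img[np.ix_(r0, c1)] * wc
    bottom = img[np.ix_(r1, c0)] * (1 - wc) + img[np.ix_(r1, c1)] * wc
    return top * (1 - wr) + bottom * wr

# Hypothetical 2 x 2 image resampled from 30 m to 20 m cells.
image = np.array([[0, 30], [60, 90]])
nn = resample(image, 30.0, 20.0, method="nearest")
bli = resample(image, 30.0, 20.0, method="bilinear")
print(nn)
print(bli)
```

The NN output contains only values that already existed in the input, while the BLI output introduces new, averaged values. That averaging is why BLI looks smoother but loses radiometric extremes.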

Nearest Neighbor:

Step 1:

Bring the image to be resampled into the Erdas 2013 viewer. In this case it is eau_claire_2011.img.

Step 2:

Click on Raster in the menu bar.

Step 3:

Click Spatial in the raster tool

menu, followed by Resample Pixel Size.

This will result in the opening of a Resample window.

Step 4 (NN):

The name of the file currently in the Erdas viewer should appear in the input file section of the resample

window.

a) Click on the folder icon beside the

output file section of the Resample window.

b) Navigate to the appropriate output

folder and type the output file’s name, in this case it is eau_claire_nn.img, and click OK.

c) Under Resample Method in the resample window, choose the default setting

of nearest neighbor.

d) Change the Output Cell Size from 30

to 20 meters (resampling up) for

both x- and y-cells.

e) Accept the remaining defaults and

click OK to run the model.

Bilinear Interpolation:

Steps 1

through 3 can be repeated in order to resample an image using BLI. However, the

following changes demonstrated in Step 4

(BLI) are essential to creating an image resampled with the BLI method.

Step 4

(BLI): The name of the file currently in the Erdas viewer should appear in the

input file section of the resample window.

a) Click on the folder icon beside the

output file section of the Resample window.

b) Navigate to the appropriate output

folder and type the output file’s name, in this case it is eau_claire_bli.img,

and click OK.

c) Under Resample Method in the resample window, change the setting from the default of nearest neighbor to bilinear interpolation.

d) Change the Output Cell Size from 30

to 20 meters (resampling up) for

both x- and y-cells.

e) Accept the remaining defaults and

click OK to run the model.

Q5. Is there any difference in the appearance of the original image and

the resampled image generated using nearest neighbor resampling method? Explain

your observations.

The differences between the nearest neighbor (NN) image and the original image are not very stark. Even though the pixel size of the NN image was decreased by 10 m (from 30 m to 20 m), the output image appeared somewhat blurry compared to the original, especially when zooming in (note: the metadata for the output image confirmed that a 20 m pixel size was achieved in the NN image).

Q6. Is there any difference in the appearance of the original image and

the resampled image generated using the bilinear interpolation resampling

method? Explain your observations.

The bilinear

interpolation image (BLI) is much smoother than both the NN and the original

image. According to the Erdas Field Guide (1999), the BLI method produces a more

spatially accurate image than NN while compromising radiometric extremes due to

the averaging of pixel data.

Work Cited

Retrieved from:

https://uwec.courses.wisconsin.edu/d2l/lms/content/home.d2l?ou=2187799

on 1 November, 2013.
